Working with series and time series data in F#

In this section, we look at F# data frame library features that are useful when working with time series data or, more generally, any ordered series. Although we mainly look at operations on the Series type, many of the operations can also be applied to a data frame (Frame) containing multiple series. Furthermore, data frames provide an elegant way to align and join series.

You can also get this page as an F# script file from GitHub and run the samples interactively.

Generating input data

For the purpose of this tutorial, we'll need some input data. For simplicity, we use the following function, which generates random prices using geometric Brownian motion. The code is adapted from the financial tutorial on Try F#.

The implementation of the function is not particularly important for the purpose of this page, but you can find it in the script file with full source.

Once we have the function, we define a date today (representing today's midnight) and two helper functions that set basic properties for the randomPrice function.
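As a rough sketch of what such a generator might look like, the following script produces prices with geometric Brownian motion using only the .NET base library. The Box-Muller sampling, the final Seq.toList and the numeric arguments passed to stock1 and stock2 are assumptions made for illustration; the script file with the full source may differ.

```fsharp
open System

/// Sketch of a geometric Brownian motion price generator.
/// 'seed' seeds the random number generator, 'drift' and 'volatility'
/// shape the price movement, 'initial' and 'start' give the first price
/// and date, 'span' is the step between observations and 'count' is the
/// number of values to generate.
let randomPrice (seed: int) (drift: float) (volatility: float) (initial: float)
                (start: DateTimeOffset) (span: TimeSpan) (count: int) =
    let rnd = Random(seed)
    // Standard normal sample via the Box-Muller transform (an assumption;
    // a statistics library could be used instead)
    let sampleNormal () =
        let u1 = 1.0 - rnd.NextDouble()   // in (0, 1], so log u1 is finite
        let u2 = rnd.NextDouble()
        sqrt (-2.0 * log u1) * cos (2.0 * Math.PI * u2)
    let dt = span.TotalDays / 250.0
    let driftExp = (drift - 0.5 * pown volatility 2) * dt
    let randExp = volatility * sqrt dt
    (start, initial)
    |> Seq.unfold (fun (time, price) ->
        let price = price * exp (driftExp + randExp * sampleNormal ())
        Some((time, price), (time + span, price)))
    |> Seq.take count
    |> Seq.toList   // materialize so that repeated reads see the same values

// Midnight today in the current time zone
let today = DateTimeOffset(DateTime.Today)

// Helpers that fix everything except the time span and the count
// (the numeric parameters are illustrative)
let stock1 = randomPrice 1 0.1 3.0 20.0 today
let stock2 = randomPrice 2 0.2 1.5 22.0 today
```

Calling stock1 (TimeSpan(0, 1, 0)) 5 then yields five (time, price) pairs spaced one minute apart, starting at today.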

To get random prices, we now only need to call stock1 or stock2 with TimeSpan and

the required number of prices:

1: 2: 3: |

|

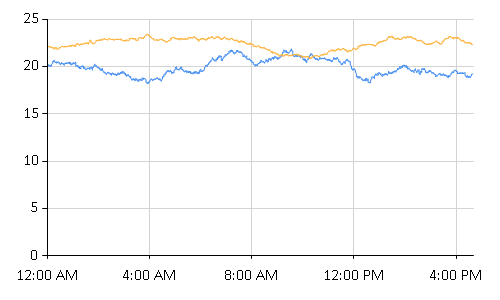

The above snippet generates 1k of prices in one minute intervals and plots them using the F# Charting library. When you run the code and tweak the chart look, you should see something like this:

Data alignment and zipping

One of the key features of the data frame library for working with time series data is automatic alignment based on the keys. When we have multiple time series with date as the key (here, we use DateTimeOffset, but any type of date will do), we can combine multiple series and align them automatically to specified date keys.

To demonstrate this feature, we generate random prices in 60 minute, 30 minute and 65 minute intervals:


Zipping time series

Let's first look at operations that are available on the Series<K, V> type. A series exposes a Zip operation that can combine multiple series into a single series of pairs. This is not as convenient as working with data frames (which we'll see later), but it is useful if you only need to work with one or two columns without missing values:
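A small sketch of zipping, assuming the Deedle NuGet package and using short hand-made series in place of the random prices. The names start, s1, s2 and s3 and all the values are made up for illustration.

```fsharp
#r "nuget: Deedle"
open System
open Deedle

let start = DateTimeOffset(2013, 10, 1, 0, 0, 0, TimeSpan.Zero)

// Observations at 60, 30 and 65 minute intervals (illustrative values)
let s1 = series [ for i in 0 .. 2 -> start.AddHours(float i) => 20.0 + float i ]
let s2 = series [ for i in 0 .. 5 -> start.AddMinutes(30.0 * float i) => 22.0 + float i ]
let s3 = series [ for i in 0 .. 2 -> start.AddMinutes(65.0 * float i) => 30.0 + float i ]

// Keep the keys of s1 and attach the matching values of s2
let left = s1.Zip(s2, JoinKind.Left)

// Keep the keys of s2; the s1 component is missing at the half hours
let right = s1.Zip(s2, JoinKind.Right)

// Keep the keys of s1 and take the nearest earlier observation of s3
let nearest = s1.Zip(s3, JoinKind.Left, Lookup.ExactOrSmaller)
```

Each value of the result is a pair of optional values, so a component has to be checked with HasValue before its Value is read.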

Using Zip on series is somewhat complicated. The result is a series of tuples, but each component of the tuple may be missing. To represent this, the library uses the T opt type (a type alias for OptionalValue<T>). This is not necessary when we use a data frame to work with multiple columns.

Joining data frames

When we store data in data frames, we do not need to use tuples to represent combined values. Instead, we can simply use a data frame with multiple columns. To see how this works, let's first create three data frames containing the three series from the previous section:

Similarly to Series<K, V>, the type Frame<R, C> has an instance method Join that can be used for joining (for unordered) or aligning (for ordered) data. The same operation is also exposed as the Frame.join and Frame.joinAlign functions, but it is usually more convenient to use the member syntax in this case:
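The following sketch shows both syntaxes on three single-column frames. It assumes the Deedle NuGet package; the series are small hand-made stand-ins for the random prices, and all names and values are illustrative.

```fsharp
#r "nuget: Deedle"
open System
open Deedle

let start = DateTimeOffset(2013, 10, 1, 0, 0, 0, TimeSpan.Zero)
let s1 = series [ for i in 0 .. 2 -> start.AddHours(float i) => 20.0 + float i ]
let s2 = series [ for i in 0 .. 5 -> start.AddMinutes(30.0 * float i) => 22.0 + float i ]
let s3 = series [ for i in 0 .. 2 -> start.AddMinutes(65.0 * float i) => 30.0 + float i ]

// One single-column frame per series
let f1 = Frame.ofColumns [ "S1" => s1 ]
let f2 = Frame.ofColumns [ "S2" => s2 ]
let f3 = Frame.ofColumns [ "S3" => s3 ]

// Union of the keys; S1 is missing at the half hours
let outer = f1.Join(f2, JoinKind.Outer)

// Only the keys that appear in both frames
let inner = f1.Join(f2, JoinKind.Inner)

// Keys of f2; S3 takes the nearest earlier observation for each key
let aligned = f2.Join(f3, JoinKind.Left, Lookup.ExactOrSmaller)

// The same operations using the function syntax
let outer2 = Frame.join JoinKind.Outer f1 f2
let aligned2 = Frame.joinAlign JoinKind.Left Lookup.ExactOrSmaller f2 f3
```

Because the first key of s3 coincides with the first key of s2, the aligned frame has a value in the S3 column for every row.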

The automatic alignment is extremely useful when you have multiple data series with different offsets between individual observations. You can choose your set of keys (dates) and then easily align other data to match the keys. An alternative to using Join explicitly is to create a new frame with just the keys that you are interested in (using Frame.ofRowKeys) and then use the AddColumn member (or the df?New <- s syntax) to add series. This will automatically left join the new series to match the current row keys.
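A sketch of this alternative, assuming the Deedle NuGet package and a frame with string column keys (which the df?New <- s syntax requires). The series names and values are made up for illustration.

```fsharp
#r "nuget: Deedle"
open System
open Deedle

let start = DateTimeOffset(2013, 10, 1, 0, 0, 0, TimeSpan.Zero)
let hourly = series [ for i in 0 .. 2 -> start.AddHours(float i) => 20.0 + float i ]
let shifted = series [ for i in 0 .. 2 -> start.AddMinutes(65.0 * float i) => 30.0 + float i ]

// Frame with the hourly keys as rows and no columns yet
let df = Frame.ofRowKeys hourly.Keys

// Exact matching: rows get a value only if the series has the same key
df?Hourly <- hourly

// Nearest earlier observation for every row key
df.AddColumn("Shifted", shifted, Lookup.ExactOrSmaller)
```

The row keys of df never change here; each added series is matched against them.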

When aligning data, you may or may not want to create a data frame with missing values. If your observations do not happen at exactly the same times, then using Lookup.ExactOrSmaller or Lookup.ExactOrGreater is a great way to avoid a mismatch.

If you have observations where one series is sampled at twice the rate of another (for example, one series is hourly and another is half-hourly), then you can create a data frame with missing values using Lookup.Exact (the default value) and then handle the missing values explicitly (as discussed elsewhere in the documentation).

Windowing, chunking and pairwise

Windowing and chunking are two operations on ordered series that allow aggregating the values of a series into groups. Both of these operations work on consecutive elements, which contrasts with grouping, which does not use order.

Sliding windows

A sliding window creates windows of a certain size (or windows defined by a certain condition). The window "slides" over the input series and provides a view on a part of the series. The key thing is that a single element will typically appear in multiple windows.

The functions used above create a window of size 4 that moves from left to right. Given the input [1,2,3,4,5,6], this produces the following three windows: [1,2,3,4], [2,3,4,5] and [3,4,5,6]. By default, the Series.window function automatically chooses the key of the last element of the window as the key for the whole window (we'll see how to change this soon):
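The behaviour described above can be tried on the same six-element input. This is a minimal sketch assuming the Deedle NuGet package; the names input, windows and means are illustrative.

```fsharp
#r "nuget: Deedle"
open Deedle

// Keys 1 .. 6 with values 1.0 .. 6.0
let input = series [ for i in 1 .. 6 -> i => float i ]

// Series of windows; each window is itself a series
let windows = input |> Series.window 4

// Aggregate each window directly into its mean
let means = input |> Series.windowInto 4 Stats.mean
```

The three windows are keyed by their last element, so means has the keys 4, 5 and 6 with the values 2.5, 3.5 and 4.5.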

What if we want to avoid creating <missing> values? One approach is to specify that we want to generate windows of smaller sizes at the beginning or at the end of the series. This way, we get incomplete windows that look like [1], [1,2], [1,2,3], followed by the three complete windows shown above:
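A sketch of this approach on the six-element input, assuming the Deedle NuGet package. The names input, padded and frame and the column names are illustrative.

```fsharp
#r "nuget: Deedle"
open Deedle

let input = series [ for i in 1 .. 6 -> i => float i ]

// Windows of size 4, with shorter windows allowed at the beginning
let padded =
    input
    |> Series.windowSizeInto (4, Boundary.AtBeginning)
        (fun (ds: DataSegment<Series<int, float>>) -> Stats.mean ds.Data)

// Original values next to the window means, aligned on the keys
let frame = Frame.ofColumns [ "Orig" => input; "Means" => padded ]
```

Every key of the input now has a mean: 1.0, 1.5 and 2.0 for the incomplete windows, then 2.5, 3.5 and 4.5.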

As you can see, the values in the first row are equal, because the first Mean value is just the average of a singleton series.

When you specify Boundary.AtBeginning (this example) or Boundary.Skip (the default value used in the previous example), the function uses the last key of the window as the key of the aggregated value. When you specify Boundary.AtEnding, the first key is used, so the values can be nicely aligned with the original values. When you want to specify a custom key selector, you can use the more general function Series.aggregate.

In the previous sample, the code that performs aggregation is no longer just a simple function like Stats.mean, but a lambda that takes ds, which is of type DataSegment<T>. This type informs us whether the window is complete or not. For example:
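One way to use this information is sketched below, assuming the Deedle NuGet package. Complete windows are turned into uppercase strings and incomplete ones are padded; the names letters and labels are illustrative.

```fsharp
#r "nuget: Deedle"
open System
open Deedle

// Characters 'a' .. 'e' with ordinal keys 0 .. 4
let letters = Series.ofValues [ 'a' .. 'e' ]

let labels =
    letters
    |> Series.windowSizeInto (3, Boundary.AtEnding) (fun (ds: DataSegment<Series<int, char>>) ->
        let text = String(ds.Data |> Series.values |> Array.ofSeq)
        match ds.Kind with
        // Full windows become uppercase strings
        | DataSegmentKind.Complete -> text.ToUpper()
        // Shortened windows at the end are padded to length 3
        | DataSegmentKind.Incomplete -> text.PadRight(3, '-'))
```

With Boundary.AtEnding the incomplete windows come last, so the values should read ABC, BCD, CDE, de- and e--.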


Window size conditions

The previous examples generated windows of fixed size. However, there are two other options for specifying when a window ends.

- The first option is to specify the maximal distance between the first and the last key.

- The second option is to specify a function that is called with the first and the last key; a window ends when the function returns false.

The two functions are Series.windowDist and Series.windowWhile (together with versions suffixed with Into that call a provided function to aggregate each window):
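A sketch of both options on hourly data, assuming the Deedle NuGet package. The series is a regular hand-made one rather than random prices, and all names are illustrative.

```fsharp
#r "nuget: Deedle"
open System
open Deedle

let start = DateTimeOffset(2013, 10, 1, 0, 0, 0, TimeSpan.Zero)

// 48 hourly observations covering two full days
let hourly = series [ for h in 0 .. 47 -> start.AddHours(float h) => float h ]

// Windows limited by the distance between their first and last key
let byDistance = hourly |> Series.windowDist (TimeSpan(24, 0, 0))

// A window starts at every key and extends while the date stays the same
let byDate =
    hourly |> Series.windowWhile (fun (k1: DateTimeOffset) k2 -> k1.Date = k2.Date)

// Number of observations in each date-based window
let sizes =
    byDate |> Series.mapValues (fun (w: Series<DateTimeOffset, float>) -> w.KeyCount)
```

The window that starts at midnight covers the whole day, while the window that starts at 23:00 contains a single observation.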


Chunking series

Chunking is similar to windowing, but it creates non-overlapping chunks, rather than (overlapping) sliding windows. The size of a chunk can be specified in the same three ways as for sliding windows (fixed size, distance on keys and condition):


The above examples use various chunking functions in a very similar way, mainly because the randomly generated input is very uniform. However, they all behave differently for inputs with non-uniform keys.

Using chunkSize means that the chunks have the same size, but may correspond to time series of different time spans. Using chunkDist guarantees that there is a maximal time span over each chunk, but it does not guarantee when a chunk starts. That is something which can be achieved using chunkWhile.
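The difference between size-based and condition-based chunks can be sketched as follows, assuming the Deedle NuGet package. The inputs are small hand-made series and all names are illustrative.

```fsharp
#r "nuget: Deedle"
open System
open Deedle

// Fixed-size chunks: keys 1 .. 7 split into [1,2,3], [4,5,6] and an incomplete [7]
let numbers = series [ for i in 1 .. 7 -> i => float i ]
let sums =
    numbers
    |> Series.chunkSize (3, Boundary.AtEnding)
    |> Series.mapValues (fun (ds: DataSegment<Series<int, float>>) -> Stats.sum ds.Data)

// Condition-based chunks: one chunk per calendar day
let start = DateTimeOffset(2013, 10, 1, 0, 0, 0, TimeSpan.Zero)
let hourly = series [ for h in 0 .. 47 -> start.AddHours(float h) => float h ]
let perDay =
    hourly
    |> Series.chunkWhile (fun (k1: DateTimeOffset) k2 -> k1.Date = k2.Date)
    |> Series.mapValues (fun (c: Series<DateTimeOffset, float>) -> c.KeyCount)
```

Unlike windows, chunks do not overlap: the sums are 6.0, 15.0 and 7.0, and each day forms exactly one chunk of 24 observations.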

Finally, all of the aggregations discussed so far are just special cases of Series.aggregate, which takes a discriminated union that specifies the kind of aggregation (see API reference).

In practice, it is more convenient to use the helpers presented here. Only in some rare cases might you need to use Series.aggregate, as it provides a few other options.

Pairwise

A special form of windowing is building a series of pairs containing a current and previous value from the input series (in other words, the key for each pair is the key of the later element). For example:

The pairwise operation always returns a series that has no value for the first key in the input series. If you want more complex behavior, you will usually need to replace pairwise with window. For example, you might want to get a series that contains the first value as the first element, followed by differences. This has the nice property that summing rows, starting from the first one, gives you the current price:
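Both variants are sketched below on four hand-made prices, assuming the Deedle NuGet package. The names prices, pairs, diffs and steps are illustrative.

```fsharp
#r "nuget: Deedle"
open Deedle

let prices = series [ 1 => 10.0; 2 => 12.0; 3 => 11.0; 4 => 15.0 ]

// Pairs of (previous, current) values; there is no pair for key 1
let pairs = prices |> Series.pairwise

// Differences between the current and the previous value
let diffs = prices |> Series.pairwiseWith (fun _ (prev, curr) -> curr - prev)

// First value followed by differences, built from windows of size 2
let steps =
    prices
    |> Series.windowSizeInto (2, Boundary.AtBeginning) (fun (ds: DataSegment<Series<int, float>>) ->
        match ds.Kind with
        // The first (incomplete) window holds only the first price
        | DataSegmentKind.Incomplete -> ds.Data.GetAt(0)
        // Later windows hold the previous and the current price
        | DataSegmentKind.Complete -> ds.Data.GetAt(1) - ds.Data.GetAt(0))
```

Summing steps gives 10.0 + 2.0 - 1.0 + 4.0 = 15.0, which is the last price in the input.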


Sampling and resampling time series

Given a time series with high-frequency prices, sampling or resampling makes it possible to get time series with representative values at lower frequency. The library uses the following terminology:

- Lookup means that we find values at a specified key; if a key is not available, we can look for the value associated with the nearest smaller or the nearest greater key.

- Resampling means that we aggregate values into chunks based on a specified collection of keys (e.g. explicitly provided times), or based on some relation between keys (e.g. date times having the same date).

- Uniform resampling is similar to resampling, but we specify keys by providing functions that generate a uniform sequence of keys (e.g. days); the operation also fills in values for days that have no corresponding observations in the input sequence.

Finally, the library also provides a few helper functions that are specifically designed for series with keys of types DateTime and DateTimeOffset.

Lookup

Given a series hf, you can get a value at a specified key using hf.Get(key) or using hf |> Series.get key. However, it is also possible to find values for a larger number of keys at once. The instance member for doing this is hf.GetItems(..). Moreover, both Get and GetItems take an optional parameter that specifies the behavior when the exact key is not found.

Using the function syntax, you can use Series.getAll for exact key lookup and Series.lookupAll when you want more flexible lookup:
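A compact sketch of these lookups on a series with gaps between its keys, assuming the Deedle NuGet package. The integer keys and all names are chosen purely for illustration.

```fsharp
#r "nuget: Deedle"
open Deedle

// Observations exist only at keys 1, 3 and 5
let obs = series [ 1 => 10.0; 3 => 30.0; 5 => 50.0 ]

// Exact lookup, member and function syntax
let exact = obs.Get(3)
let viaModule = obs |> Series.get 5

// Key 4 is not present, so fall back to the nearest smaller key (3)
let nearest = obs.Get(4, Lookup.ExactOrSmaller)

// Several keys at once
let some = obs |> Series.getAll [ 1; 5 ]
let smaller = obs |> Series.lookupAll [ 2; 4 ] Lookup.ExactOrSmaller
let greater = obs |> Series.lookupAll [ 2; 4 ] Lookup.ExactOrGreater
```

The results of lookupAll are keyed by the requested keys (2 and 4), not by the keys where the values were found.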


Lookup operations only return one value for each key, so they are useful for quick sampling of large (or high-frequency) data. When we want to calculate a new value based on multiple values, we need to use resampling.

Resampling

Series supports two kinds of resampling. The first kind is similar to lookup in that we have to explicitly specify keys. The difference is that resampling does not find just the nearest key, but all smaller or greater keys. For example:
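A minimal sketch of key-based resampling in both directions, assuming the Deedle NuGet package. The sample keys are chosen to coincide with the ends of the input, so that no boundary handling is involved; all names are illustrative.

```fsharp
#r "nuget: Deedle"
open Deedle

let data = series [ for i in 1 .. 6 -> i => float i ]

// Forward: each key starts a chunk that runs until the next key
let forward =
    data
    |> Series.resampleInto [ 1; 4 ] Direction.Forward
        (fun _ (chunk: Series<int, float>) -> Stats.sum chunk)

// Backward: each key ends a chunk that collects the preceding values
let backward =
    data
    |> Series.resampleInto [ 3; 6 ] Direction.Backward
        (fun _ (chunk: Series<int, float>) -> Stats.sum chunk)

// Without an aggregation function, the result is a series of series
let chunks = data |> Series.resample [ 1; 4 ] Direction.Forward
```

Both directions split the input into [1,2,3] and [4,5,6] here; only the keys attached to the chunks differ.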

The second kind of resampling is based on a projection from existing keys in the series. The operation then collects chunks such that the projection returns equal keys. This is very similar to Series.groupBy, but resampling assumes that the projection preserves the ordering of the keys, and so it only aggregates consecutive keys.

The typical scenario is when you have a time series with date time information (here DateTimeOffset) and want to get information for each day (we use DateTime with empty time to represent dates):
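A sketch of this scenario with three hand-made observations over two days, assuming the Deedle NuGet package. The helper at and all values are illustrative.

```fsharp
#r "nuget: Deedle"
open System
open Deedle

// Hypothetical helper: an observation time in October 2013 (UTC)
let at (day: int) (hour: int) = DateTimeOffset(2013, 10, day, hour, 0, 0, TimeSpan.Zero)

let intraday = series [ at 3 9 => 10.0; at 3 15 => 12.0; at 4 10 => 20.0 ]

// Group consecutive observations whose keys project to the same date
let byDay = intraday |> Series.resampleEquiv (fun (d: DateTimeOffset) -> d.Date)

// Aggregate each daily chunk into its mean
let dailyMean =
    byDay |> Series.mapValues (fun (s: Series<DateTimeOffset, float>) -> Stats.mean s)
```

The keys of the result are DateTime values (3 and 4 October), with the means 11.0 and 20.0.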

The same operation can be easily implemented using Series.chunkWhile, but as it is often used in the context of sampling, it is included in the library as a primitive. Moreover, we'll see that it is closely related to uniform resampling.

Note that the resulting series has a different type of keys than the source. The source has keys of type DateTimeOffset (representing date with time), while the resulting keys are of the type returned by the projection (here, DateTime representing just dates).

Uniform resampling

In the previous section, we looked at resampleEquiv, which is useful if you want to sample time series by keys with "lower resolution", for example, to sample date time observations by date. However, the function discussed in the previous section only generates values for which there are keys in the input sequence. If there is no observation for an entire day, then the day will not be included in the result.

If you want to create a sampling that assigns a value to each key in the range specified by the input sequence, then you can use uniform resampling.

The idea is that uniform resampling applies the key projection to the smallest and greatest key of the input (e.g. gets the date of the first and last observation) and then generates all keys in the projected space (e.g. all dates). Then it picks the best value for each of the generated keys.

To perform the uniform resampling, we need to specify how to project (resampled) keys from original keys (we return the Date), how to calculate the next key (add 1 day) and how to fill missing values.

After performing the resampling, we turn the data into a data frame, so that we can nicely see the results. The individual chunks have the actual observation times as keys, so we replace those with just integers (using Series.indexOrdinally). The result contains a simple ordered row of observations for each day.

The important thing is that there is an observation for each day, even for 10/5/2013, which does not have any corresponding observations in the input. We call the resampling function with Lookup.ExactOrSmaller, so the value 17.51 is picked from the last observation of the previous day (Lookup.ExactOrGreater would pick 18.80 and Lookup.Exact would give us an empty series for that date).
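The filling behaviour can be sketched on six hand-made observations with a gap on 10/5, assuming the Deedle NuGet package. The values 1.0 to 6.0 are made up and do not correspond to the prices quoted above; the helper at is hypothetical.

```fsharp
#r "nuget: Deedle"
open System
open Deedle

// Hypothetical helper: an observation time in October 2013 (UTC)
let at (day: int) (hour: int) = DateTimeOffset(2013, 10, day, hour, 0, 0, TimeSpan.Zero)

// One value for 10/3, three for 10/4, none for 10/5 and two for 10/6
let sparse =
    series [ at 3 12 => 1.0; at 4 15 => 2.0; at 4 18 => 3.0;
             at 4 19 => 4.0; at 6 15 => 5.0; at 6 21 => 6.0 ]

// One chunk per day; days without data take the nearest earlier observation
let sampled =
    sparse
    |> Series.resampleUniform Lookup.ExactOrSmaller
        (fun (dt: DateTimeOffset) -> dt.Date)
        (fun (d: DateTime) -> d.AddDays(1.0))

// Number of observations that ended up in each daily chunk
let sizes =
    sampled |> Series.mapValues (fun (s: Series<DateTimeOffset, float>) -> s.KeyCount)
```

The chunk for 10/5 contains the single value 4.0, taken from the last observation on 10/4.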

Sampling time series

Perhaps the most common sampling operation that you might want to do is to sample time series by a specified TimeSpan. Although this can be easily done by using some of the functions above, the library provides helper functions exactly for this purpose:
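A sketch of time-based sampling on one hour of per-minute data, assuming the Deedle NuGet package and its Series.sampleTime function. The input is hand-made and all names are illustrative.

```fsharp
#r "nuget: Deedle"
open System
open Deedle

let start = DateTimeOffset(2013, 10, 1, 0, 0, 0, TimeSpan.Zero)

// One observation per minute for an hour
let minutes = series [ for m in 0 .. 59 -> start.AddMinutes(float m) => float m ]

// Chunks at 15 minute intervals; 'Backward' collects the values that
// precede each generated key
let quarters = minutes |> Series.sampleTime (TimeSpan(0, 15, 0)) Direction.Backward

// Number of observations in each chunk
let sizes =
    quarters |> Series.mapValues (fun (s: Series<DateTimeOffset, float>) -> s.KeyCount)
```

Every observation of the input should end up in exactly one chunk, so the sizes add up to 60.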


Calculations and statistics

In the final section of this tutorial, we look at writing some calculations over time series. Many of the functions demonstrated here can be also used on unordered data frames and series.

Shifting and differences

First of all, let's look at functions that we need when we want to compare subsequent values in the series. We already demonstrated how to do this using Series.pairwise. In many cases, the same thing can be done using an operation that operates over the entire series.

The two useful functions here are:

- Series.diff calculates the difference between the current and the n-th previous element

- Series.shift shifts the values of a series by a specified offset

The following snippet illustrates how both functions work:

In the above snippet, we first calculate the difference using the Series.diff function. Then we also show how to do that using Series.shift and a binary operator applied to two series (sample - shift). The following section provides more details.
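Both approaches are sketched below on four hand-made values, assuming the Deedle NuGet package. The names sample, diffs, previous and viaShift are illustrative.

```fsharp
#r "nuget: Deedle"
open Deedle

let sample = series [ 1 => 10.0; 2 => 12.0; 3 => 11.0; 4 => 15.0 ]

// Difference between each value and the one before it
let diffs = sample |> Series.diff 1

// Values moved one key forward, so each key holds the previous value
let previous = sample |> Series.shift 1

// The same differences using the subtraction operator on two series
let viaShift = sample - previous
```

Neither result has a value for the first key, because there is no earlier element to compare it with.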

So far, we also used the functional notation (e.g. sample |> Series.diff 1), but all operations can be called using the member syntax; very often, this gives you a shorter syntax. This is also shown in the next few snippets.

Operators and functions

Time series also support a large number of standard F# functions such as log and abs. You can also use standard numerical operators to apply some operation to all elements of the series.

Because series are indexed, we can also apply binary operators to two series. This automatically aligns the series and then applies the operation on corresponding elements.
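A short sketch of these operators, assuming the Deedle NuGet package. The two series overlap only on keys 2 and 3, which makes the alignment visible; all names and values are illustrative.

```fsharp
#r "nuget: Deedle"
open Deedle

let a = series [ 1 => 1.0; 2 => 2.0; 3 => 3.0 ]
let b = series [ 2 => 10.0; 3 => 20.0; 4 => 30.0 ]

// Scalar operators and standard functions apply to every element
let doubled = a * 2.0
let distance = abs (a - 2.0)
let logs = log a

// Binary operators align the two series on their keys first
let total = a + b
```

The sum has values only where both inputs have a value, that is for the keys 2 and 3.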

The time series library provides a large number of functions that can be applied in this way. These include trigonometric functions (sin, cos, ...), rounding functions (round, floor, ceil), exponentials and logarithms (exp, log, log10) and more. In general, whenever there is a built-in numerical F# function that can be used on standard types, the time series library should support it too.

However, what can you do when you write a custom function to do some calculation and want to apply it to all series elements? Let's have a look:

In general, the best way to apply custom functions to all values in a series is to align the series (using either Series.join or Series.joinAlign) into a single series containing tuples and then apply Series.mapValues. The library also provides the $ operator that simplifies the last step: f $ s applies the function f to all values of the series s.
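The last step can be sketched as follows, assuming the Deedle NuGet package. The function adjust and the input values are made up for illustration.

```fsharp
#r "nuget: Deedle"
open Deedle

// Hypothetical custom function: clamp a value to the interval [-1.0, +1.0]
let adjust v = min 1.0 (max (-1.0) v)

let changes = series [ 1 => -3.0; 2 => 0.5; 3 => 2.0 ]

// Apply the function to every value
let viaMap = changes |> Series.mapValues adjust

// The same thing using the $ operator
let viaOperator = adjust $ changes
```

Both expressions produce the values -1.0, 0.5 and 1.0 under the original keys.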

Data frame operations

Finally, many of the time series operations demonstrated above can be applied to entire data frames as well. This is particularly useful if you have a data frame that contains multiple aligned time series of similar structure (for example, if you have multiple stock prices or open-high-low-close values for a given stock).

The following snippet is a quick overview of what you can do:
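A few frame-level operations are sketched here, assuming the Deedle NuGet package and a version in which Stats.sum and Stats.mean accept frames. The frame and its column names are illustrative.

```fsharp
#r "nuget: Deedle"
open Deedle

let df =
    Frame.ofColumns
        [ "A" => series [ 1 => 1.0; 2 => 2.0; 3 => 3.0 ]
          "B" => series [ 1 => 10.0; 2 => 20.0; 3 => 30.0 ] ]

// Multiply all numeric columns by a constant
let scaled = df * 2.0

// Sum of each column, as a series keyed by column name
let sums = Stats.sum df

// Divide the sum of each column by its mean
let ratio = Stats.sum df / Stats.mean df
```

Since every column has three values, ratio is 3.0 for both columns.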


